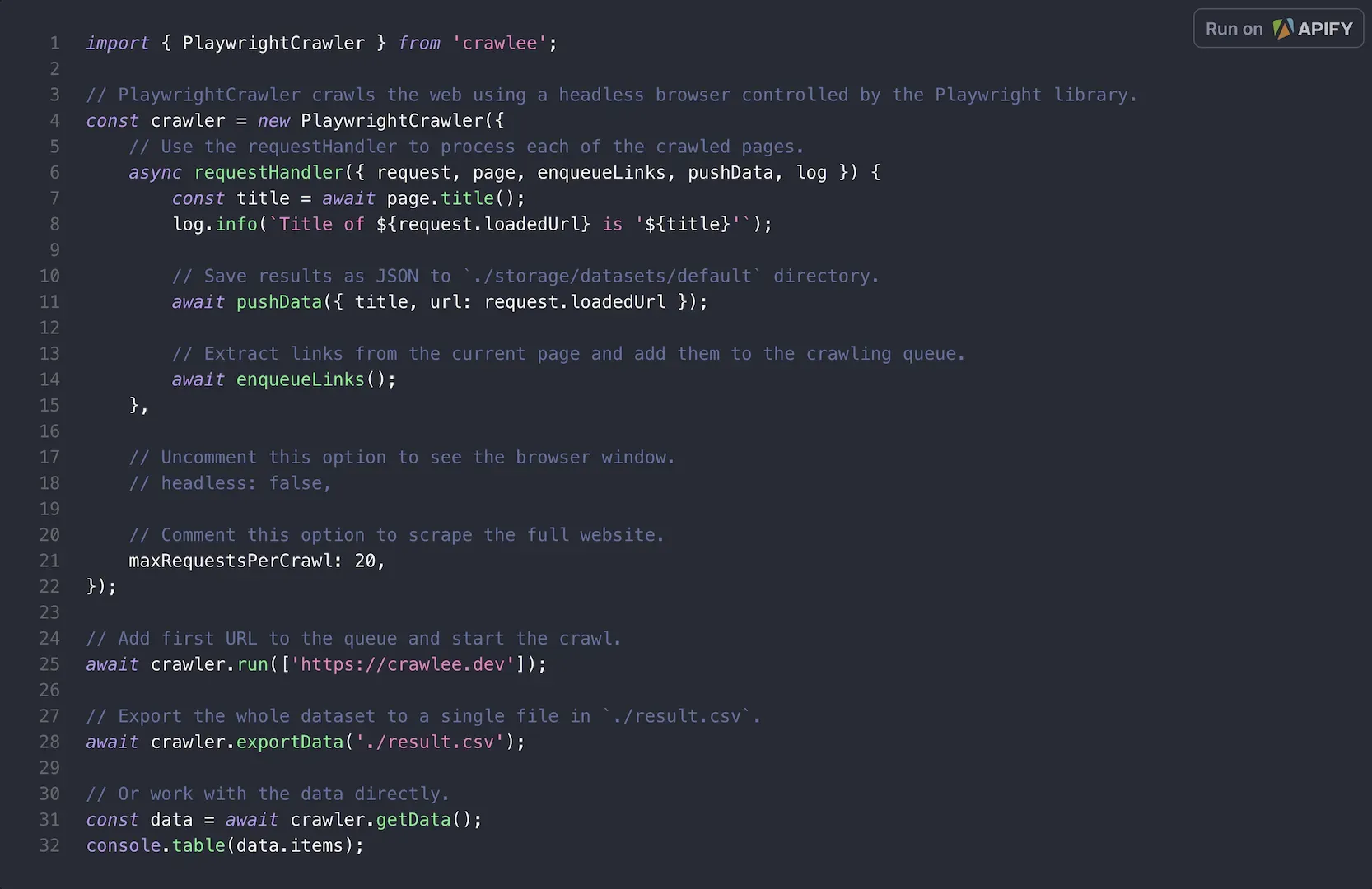

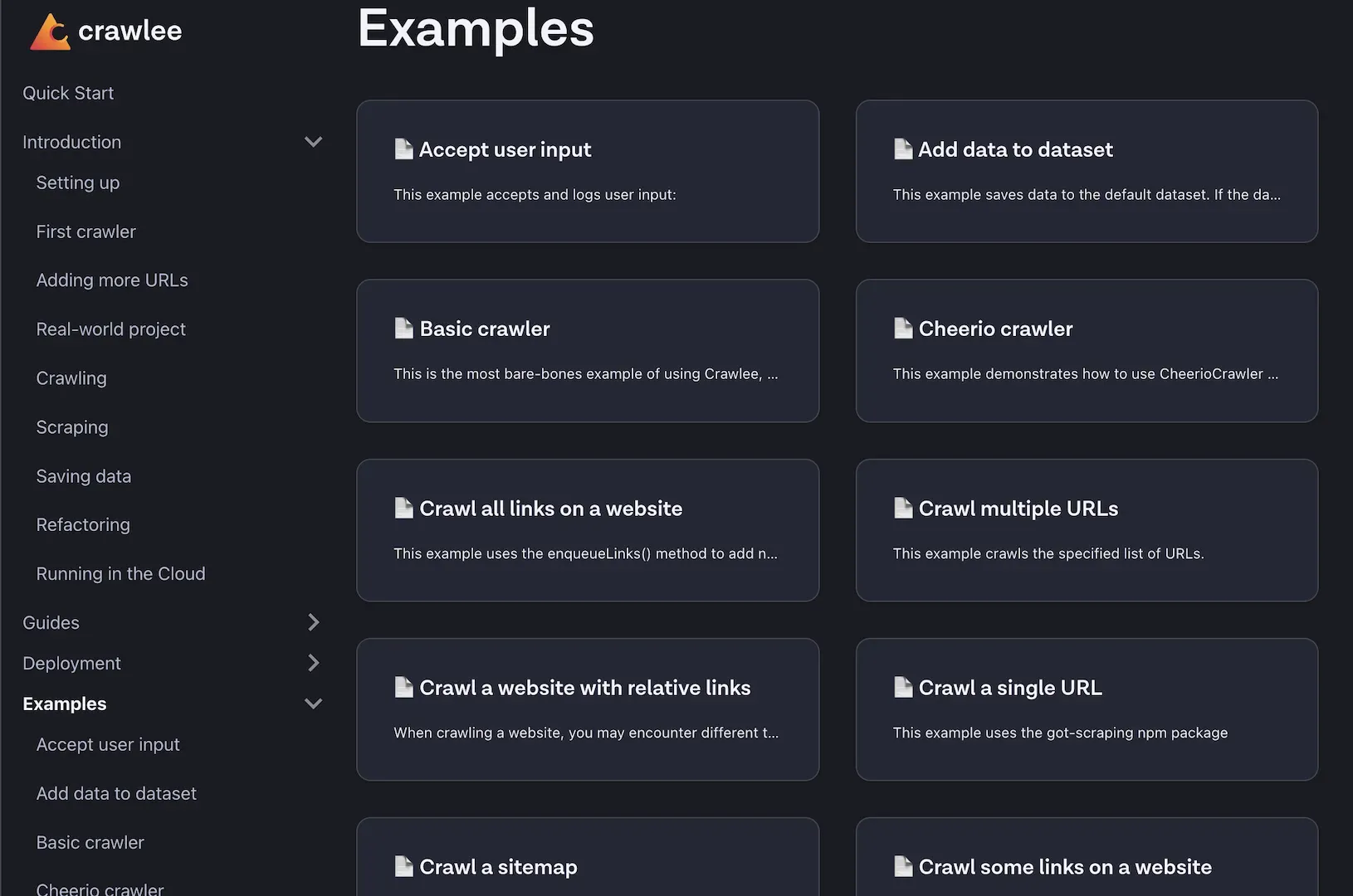

Crawlee is an open-source web scraping and browser automation library built for Node.js and Python. It helps developers create reliable crawlers with minimal effort. The library handles the complex parts of web scraping like proxy rotation, request queuing, and data storage. Crawlee supports both simple HTTP requests and headless browsers, making it versatile for different scraping needs. It's built by people who scrape for a living and used daily to crawl millions of pages.

Rotates proxies intelligently with human-like fingerprints to reduce blocking. Automatically discards problematic proxies.
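The idea of rotating proxies and dropping the ones that keep failing can be sketched in a few lines of standard-library Python. This is not Crawlee's implementation or API: the `ProxyPool` class, its method names, and the `max_failures` threshold are all hypothetical, and the fingerprinting side is left out entirely.

```python
from typing import Dict, List, Sequence


class ProxyPool:
    """Hypothetical round-robin proxy pool that discards repeatedly failing proxies."""

    def __init__(self, proxies: Sequence[str], max_failures: int = 3) -> None:
        # Drop duplicate entries while keeping the original order.
        self._proxies: List[str] = list(dict.fromkeys(proxies))
        self._failures: Dict[str, int] = {p: 0 for p in self._proxies}
        self._max_failures = max_failures
        self._index = 0

    def next_proxy(self) -> str:
        """Return the next healthy proxy in rotation."""
        if not self._proxies:
            raise RuntimeError("no healthy proxies left")
        proxy = self._proxies[self._index % len(self._proxies)]
        self._index += 1
        return proxy

    def report_success(self, proxy: str) -> None:
        """Reset the failure counter after a successful request."""
        if proxy in self._failures:
            self._failures[proxy] = 0

    def report_failure(self, proxy: str) -> None:
        """Count a failure and discard the proxy once it reaches the threshold."""
        if proxy not in self._failures:
            return  # already discarded or never known
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            self._proxies.remove(proxy)
            del self._failures[proxy]

    def healthy(self) -> List[str]:
        """Return a copy of the proxies still in rotation."""
        return list(self._proxies)
```

A caller would fetch a proxy with `next_proxy()`, make the request, and then call `report_success()` or `report_failure()` depending on the outcome.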

Includes tools for extracting social handles and phone numbers, handling infinite scrolling, and blocking unwanted assets.

Choose between HTTP crawling with Cheerio/JSDOM parsers or browser automation with Puppeteer/Playwright for JavaScript-heavy sites.

Built-in request queue ensures URL uniqueness and preserves progress. Includes dataset storage for saving structured results.
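To make the URL-uniqueness guarantee concrete, here is a minimal in-memory sketch using only the standard library. The `RequestQueue` class below and the normalization rules in `unique_key` are assumptions for illustration, not Crawlee's actual queue or its key format, and the sketch does not persist progress to disk.

```python
from collections import deque
from typing import Deque, Optional, Set
from urllib.parse import urlsplit, urlunsplit


def unique_key(url: str) -> str:
    """Normalize a URL so trivially different spellings share one key (illustrative rules)."""
    parts = urlsplit(url.strip())
    # Lowercase scheme and host, drop the fragment, treat an empty path as "/".
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


class RequestQueue:
    """Hypothetical FIFO queue that accepts each normalized URL only once."""

    def __init__(self) -> None:
        self._pending: Deque[str] = deque()
        self._seen: Set[str] = set()

    def add(self, url: str) -> bool:
        """Enqueue the URL; return False if an equivalent URL was already added."""
        key = unique_key(url)
        if key in self._seen:
            return False
        self._seen.add(key)
        self._pending.append(url)
        return True

    def fetch_next(self) -> Optional[str]:
        """Return the next pending URL, or None when the queue is empty."""
        return self._pending.popleft() if self._pending else None
```

Because `_seen` is never cleared, a URL that has already been fetched is still rejected if a page links to it again, which is what keeps a crawl from looping.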

Mimics browser headers and TLS fingerprints with automatic rotation based on real-world traffic patterns.

Manages concurrency based on available system resources to optimize performance without overloading your machine.
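One way to picture resource-based concurrency is a small control function that nudges the number of parallel tasks up while the machine has headroom and down when it is overloaded. The function below is a simplified sketch under assumed parameters (`limit`, `step`, and the bounds are hypothetical), not the algorithm Crawlee uses.

```python
import math


def next_concurrency(
    current: int,
    cpu_ratio: float,
    mem_ratio: float,
    *,
    min_concurrency: int = 1,
    max_concurrency: int = 50,
    limit: float = 0.8,
    step: float = 0.1,
) -> int:
    """Return the concurrency to use for the next interval.

    cpu_ratio and mem_ratio are the fractions (0.0 to 1.0) of CPU and memory
    currently in use. All thresholds here are illustrative assumptions.
    """
    # Move by a fraction of the current level, but always by at least one task.
    change = max(1, math.ceil(current * step))
    if cpu_ratio > limit or mem_ratio > limit:
        # Overloaded: back off, but never below the minimum.
        return max(min_concurrency, current - change)
    # Headroom available: scale up, but never above the maximum.
    return min(max_concurrency, current + change)
```

A crawler loop would sample system usage periodically, call this function, and start or pause workers until the running count matches the returned value.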

Collect structured data from websites for analysis, research, or integration with other systems.

Use browser automation capabilities to test web applications across different scenarios.

Track changes on websites and collect updates automatically for monitoring competitors or market changes.

Gather pricing, product information, and other competitive data from multiple sources automatically.

Extract contact information and business details from websites for sales and marketing purposes.

Free and open source. Cloud deployment on the Apify platform is priced separately, in tiers.