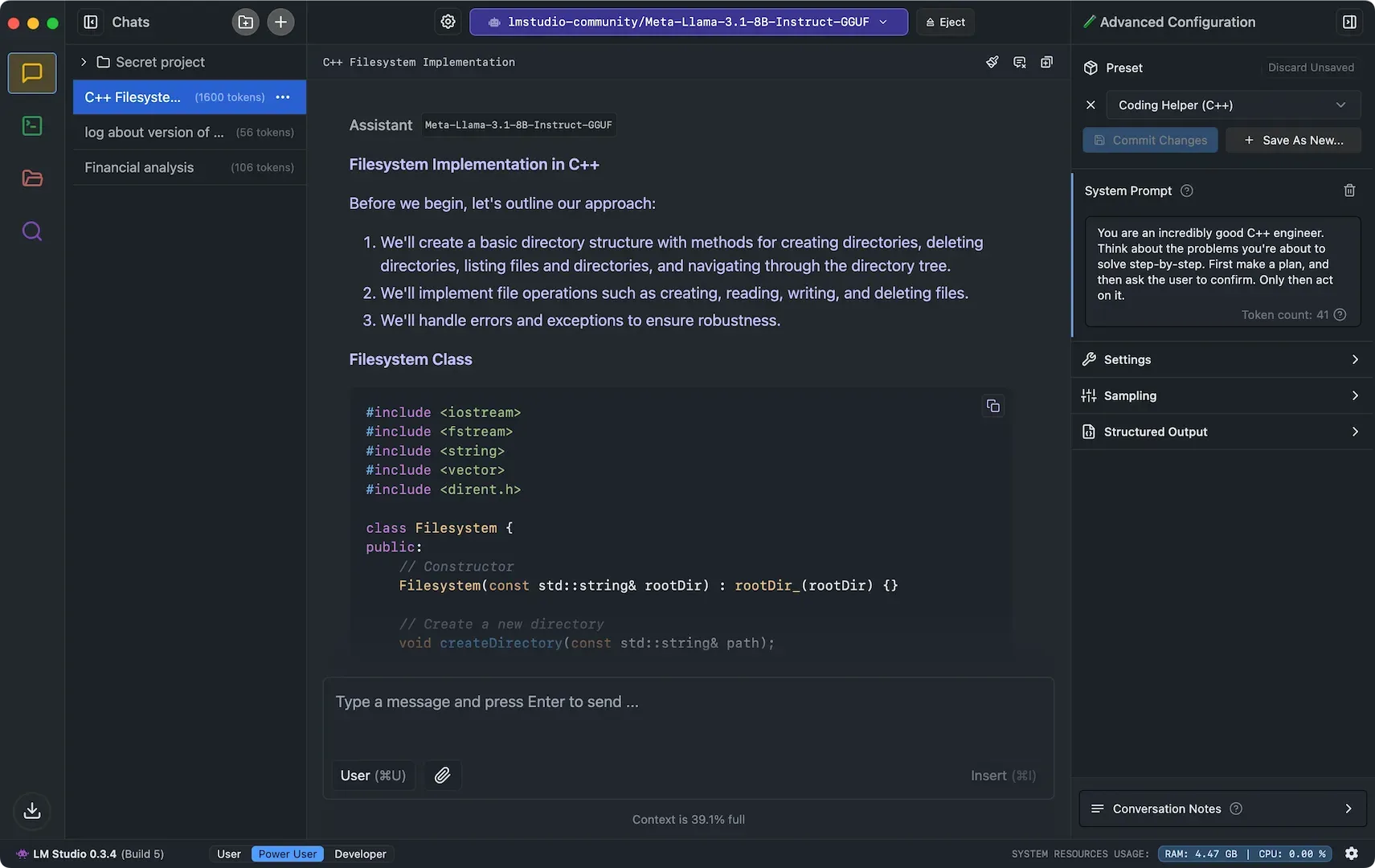

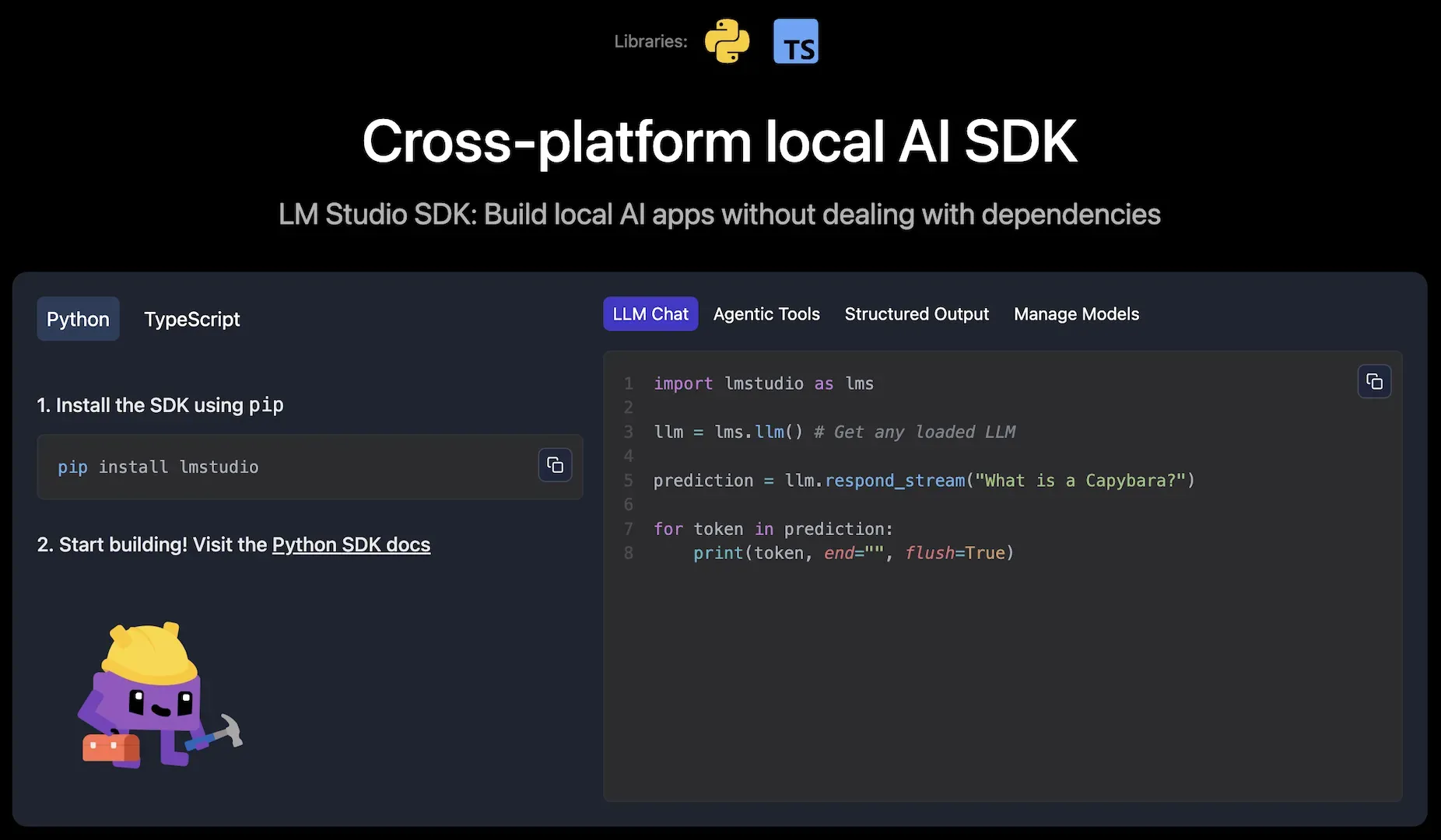

LM Studio lets you run powerful language models on your own hardware. It works offline. Your data stays on your machine. You download models from a catalog, chat with them, or set up a local server to power your apps. It supports document chat through RAG and offers SDKs for Python and JavaScript developers.

Run large language models directly on your laptop or PC without sending data to external servers.

Browse and download open-source models from Hugging Face in GGUF format (all platforms) and MLX format (Mac only).

Set up a local server with an OpenAI-compatible API to power your applications with local AI.
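As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds a chat completions request using only the Python standard library. The base URL `http://localhost:1234/v1` is assumed to be the server's default address, and `local-model` is a hypothetical placeholder for whichever model you have loaded; adjust both to match your setup.

```python
import json
import urllib.request

# Assumption: the local server listens here; change it if your port differs.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,  # hypothetical placeholder, not a real model name
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `print(chat("Say hello in one sentence."))` should print the model's reply. Because the request shape follows the OpenAI format, existing OpenAI client code can usually be pointed at the local base URL instead.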

Chat with your local files using Retrieval Augmented Generation (RAG) for private document analysis.
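To make the idea of Retrieval Augmented Generation concrete, here is a toy sketch of the retrieve-then-prompt pattern. It is not LM Studio's implementation: it ranks text chunks by simple word-count cosine similarity, whereas real systems typically use embedding models. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k=1):
    """Return the k chunks most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine(query, Counter(tokenize(chunk))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, chunks, k=1):
    """Place the retrieved chunks ahead of the question in one prompt."""
    context = "\n".join(retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The resulting prompt would then be sent to a locally running model, so neither the documents nor the question leave the machine.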

Enable models to request function calls to external tools and APIs through the chat completions endpoint.
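The sketch below shows one way the application side of function calling might look, assuming the endpoint follows the OpenAI tool-calling format: the app declares tools in the request, the model replies with `tool_calls`, and the app runs them and returns the results as `tool` messages. The `get_weather` tool and its registry are hypothetical stand-ins.

```python
import json


def get_weather(city):
    """Hypothetical local tool; a real one would call an external API."""
    return {"city": city, "forecast": "sunny"}


# Tool schema sent in the "tools" field of a chat completions request.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

# Maps tool names the model may request to local Python callables.
REGISTRY = {"get_weather": get_weather}


def run_tool_calls(assistant_message):
    """Execute each requested tool call and return 'tool' role messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        # In the OpenAI format, arguments arrive as a JSON-encoded string.
        arguments = json.loads(call["function"]["arguments"])
        output = REGISTRY[name](**arguments)
        results.append(
            {
                "role": "tool",
                "tool_call_id": call["id"],
                "content": json.dumps(output),
            }
        )
    return results
```

In a full loop, the returned messages are appended to the conversation and sent back to the model so it can compose a final answer. Note that the model only requests the call; the application decides whether and how to run it.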

Build AI applications with complete data privacy, keeping sensitive information on your local machine.

Test different models, parameters, and prompts without cloud costs or API rate limits.

Process and query documents locally without uploading them to external services.

Create applications that use LLMs to call external functions and APIs based on user input.

LM Studio is free to download and use. Enterprise options are available through the "LM Studio @ Work" request form.