Ollama is a command-line tool for running large language models (LLMs) locally. It gives access to models such as Llama 4, DeepSeek, and Mistral through simple commands. Users can chat with models interactively, call them from applications through an API, and use tool calling to extend what a model can do.

Run LLMs completely on your own hardware without sending data to external services.

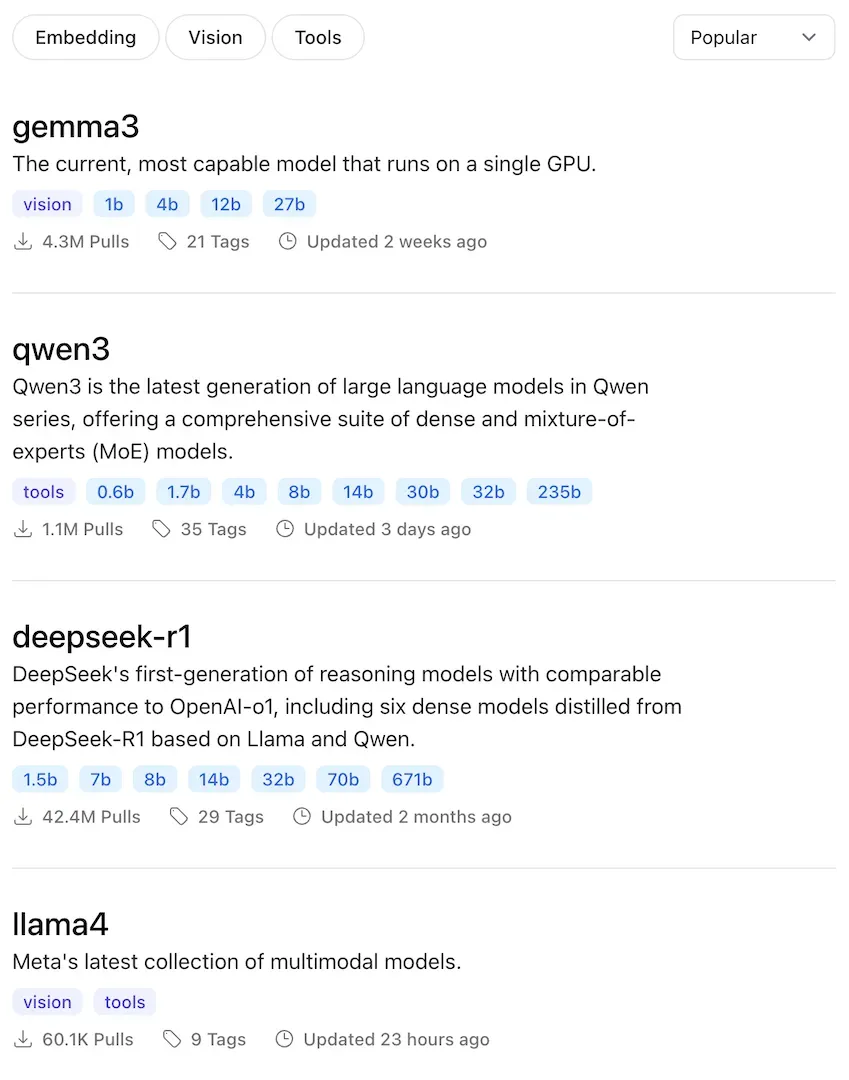

Access a variety of models including Llama 4, DeepSeek, Mistral, and specialized models for specific tasks.

Connect models to external tools and APIs so they can check the weather, browse the web, or run code.
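As a rough illustration of tool calling, the sketch below describes one tool in the JSON-schema function style and dispatches the tool calls found in an assistant message. The `get_weather` function, its canned reply, and the helper names `AVAILABLE_TOOLS` and `run_tool_calls` are hypothetical, and the message shape (a `tool_calls` list whose entries carry `function.name` and `function.arguments`) is an assumption about what the model returns; check it against your Ollama version.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool: returns canned text instead of calling a weather service."""
    return f"Sunny in {city}"


# Tool description in the JSON-schema style used for function calling.
# It would be sent in the "tools" list of a chat request.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string", "description": "City name"}},
            "required": ["city"],
        },
    },
}

# Maps tool names the model may request to local Python callables.
AVAILABLE_TOOLS = {"get_weather": get_weather}


def run_tool_calls(assistant_message: dict) -> list:
    """Run each requested tool and return the results as 'tool' role messages."""
    results = []
    for call in assistant_message.get("tool_calls") or []:
        function = call["function"]
        arguments = function["arguments"]
        # Some endpoints return arguments as a JSON string rather than an object.
        if isinstance(arguments, str):
            arguments = json.loads(arguments)
        tool = AVAILABLE_TOOLS.get(function["name"])
        if tool is None:
            content = f"Unknown tool: {function['name']}"
        else:
            content = tool(**arguments)
        results.append({"role": "tool", "content": content})
    return results
```

In a full exchange, the returned messages would be appended to the conversation and sent back to the model so it can write its final answer from the tool output.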

Interact with models programmatically through a REST API, including OpenAI-compatible endpoints.
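A minimal sketch of programmatic access, using only the Python standard library. It assumes an Ollama server on the default local address `http://localhost:11434` and a model that has already been pulled; the model name in the usage note is a placeholder, and `build_chat_payload` and `chat` are illustrative helper names, not part of Ollama.

```python
import json
import urllib.request

# Assumed default local address; adjust if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Build a non-streaming chat request body with a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def chat(model: str, prompt: str, openai_compatible: bool = False) -> str:
    """Send one prompt and return the reply text.

    Uses the native /api/chat endpoint by default, or the OpenAI-compatible
    /v1/chat/completions endpoint when openai_compatible is True.
    """
    path = "/v1/chat/completions" if openai_compatible else "/api/chat"
    data = json.dumps(build_chat_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL + path,
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # The two endpoints wrap the reply differently.
    if openai_compatible:
        return body["choices"][0]["message"]["content"]
    return body["message"]["content"]
```

With the server running, a call such as `chat("llama3.2", "Why is the sky blue?")` would return the model's reply as a string. Because the second endpoint follows the OpenAI request shape, existing OpenAI client code can usually be pointed at the local server by changing its base URL.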

Create specialized model behaviors through custom system prompts and parameters.
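One way to package a custom system prompt and parameters is a Modelfile, which Ollama can turn into a named model. The sketch below renders one as text; `render_modelfile` is a hypothetical helper, the base model and parameter values are placeholders, and it assumes the system prompt contains no triple quotes.

```python
def render_modelfile(base_model: str, system_prompt: str, parameters: dict) -> str:
    """Render Modelfile text with FROM, PARAMETER, and SYSTEM instructions."""
    lines = [f"FROM {base_model}"]
    for name, value in parameters.items():
        # One PARAMETER line per setting, e.g. "PARAMETER temperature 0.2".
        lines.append(f"PARAMETER {name} {value}")
    # Triple quotes let the system prompt span multiple lines.
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


modelfile_text = render_modelfile(
    "llama3.2",  # placeholder base model
    "You are a concise assistant.",
    {"temperature": 0.2},
)
```

Saving `modelfile_text` to a file named `Modelfile` and running `ollama create concise-assistant -f Modelfile` would register the customized model under the (hypothetical) name `concise-assistant`, after which `ollama run concise-assistant` starts a chat with it.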

Develop and test AI applications locally before deploying to production environments.

Experiment with different LLMs and parameters without cloud dependencies.

Create text, code, and creative content using natural language prompts.

Use LLMs to help analyze and interpret complex data through natural language queries.

Run a personal assistant locally with full privacy for sensitive information.

Free and open-source. No subscription required. Run privately on your own hardware.